I’ve added blur/spoiler tags, because what follows is a wall of information. So you can read in bite-sized chunks

Update: the importance of reading before you buy things…

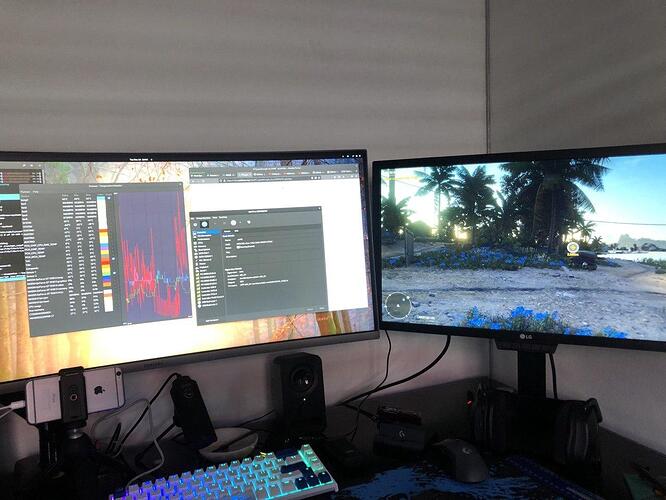

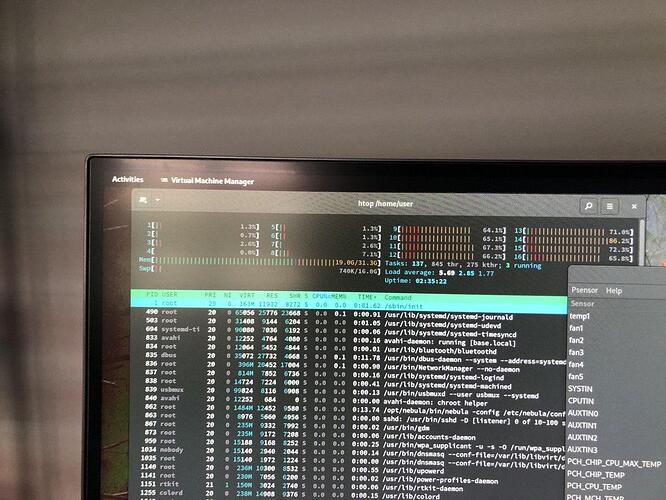

I am delirious from lack of sleep, but it works and it’s alive! On to what I ended up with. I had listed all the hardware, but actually, it’s not that important to list everything. Configuration is probably more useful.

So, I didn’t read properly, and the previous mainboard had some serious IO limitations. I definitely wanted this to handle a work virtual machine and a Windows VM, with a mix of virtual storage layered on top of native storage, as well as storage with direct pass-through. I also didn’t want to hamstring my host GPU, even though I wasn’t going to be doing any gaming on that thing. This all added up to needing a much better mainboard with better IO, as well as a new, more powerful PSU, because I didn’t want to run the power draw too close to the cliff edge constantly.

Host OS, GPUs and Storage

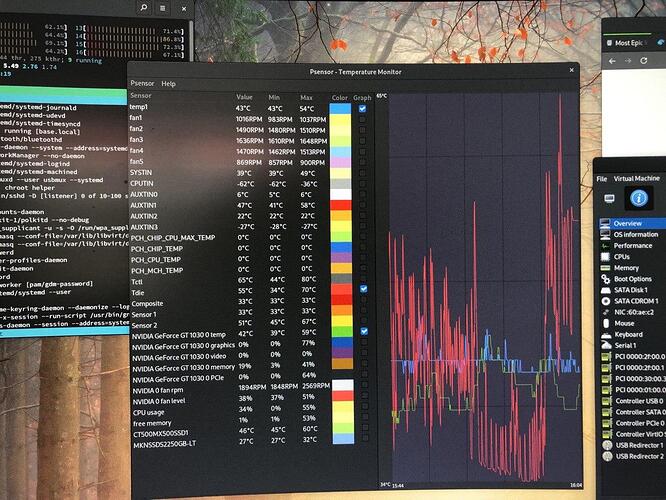

So, the host OS is much the same as in my previous proof of concept: Arch Linux. This time I have pretty much nothing installed, in comparison to the previous iteration, which had the world of shit installed to handle every eventuality. It only needs to be the host, so all I need is virt-manager to manage the VMs, some password management tools, some hardware monitoring tools, and a browser. It is installed on the 2TB NVMe drive, which sits in the 1st M.2 slot, which is directly CPU connected. This is a given for any install, on any mainboard that has at least one M.2 slot.

The host OS is using the RX 6600 GPU, plugged into the 2nd PCIe 4.0 x16 slot. Gigabyte mainboards allow you to change which PCIe slot is used for boot graphics, so this is set to slot 2. The RTX 3080 is passed through directly to the Windows guest, along with 1 USB controller that maps to 4x USB 3.x ports on the back (more on this later), and another NVMe drive for game installs, sitting in the 2nd M.2 slot. This is the other reason I upgraded the mainboard: it doesn’t disable or cut the speed of this slot if I use a GPU in the slot above it. There are also 2 SATA SSDs passed through to the guest, used for video recordings and extra storage.

USB Port Management

To manage all the peripherals, I’m actually using the USB KVM switches built into each of the 3 monitors I have installed. That’s how I get around the seeming lack of USB ports to support a whole sim rig that has a wheel, pedals, 2 shifters, a handbrake, a dash, its own mic, headphones, and a stream deck.

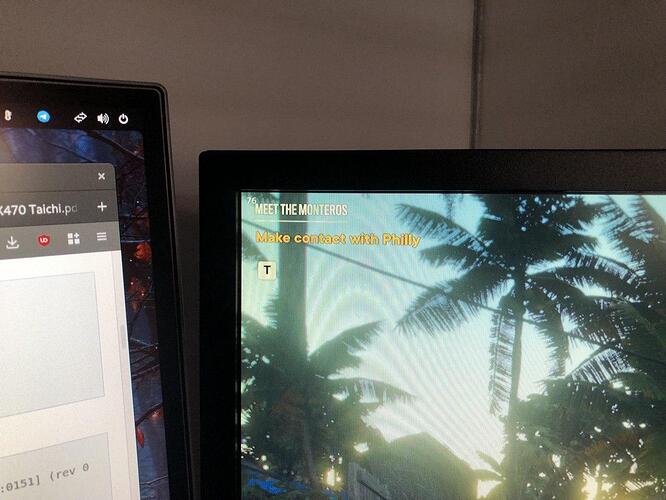

The 4 ports on the back of the mainboard that I’m passing through to the Windows guest (the ones connected to that USB controller) are for the ultrawide monitor, the 2nd 27" monitor, a loud Ducky keyboard, and the Oculus Link cable.

So from there, the ultrawide monitor’s KVM handles the sim rig peripherals. The 2nd monitor’s KVM handles mostly Windows guest peripherals: mouse, wireless headphone receiver, wireless keyboard receiver (for use when I’m sitting in the sim rig), gamepad receiver, and there are also some in-ear headphones plugged in too, because why not. The main 24" monitor’s KVM handles all the work peripherals: easy typing keyboard, phone as webcam, and main speakers, with the rest just going into the back of the PC, into ports that aren’t passed through. I am doubling up on video input on the 27" monitor, so I had to learn some shortcuts to deal with a Linux window opening on the 2nd monitor while the Windows guest is running. For the most part, though, windows open on the correct monitor. I suspect the buggers that don’t might be installed as flatpaks.
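On working out which physical ports hang off which USB controller: on Linux, each USB root hub under `/sys/bus/usb/devices` is a symlink into the PCI device tree, so the parent directory of the resolved path is the controller’s PCI address. Here is a minimal sketch under that assumption. The function names are made up for illustration, and it only looks at PCI-attached controllers. A USB 3.x controller will usually show up with two buses (one for USB 2 speeds, one for USB 3).

```python
import re
from pathlib import Path

# Matches a PCI address such as 0000:00:14.0 (domain:bus:device.function).
PCI_ADDR = re.compile(r"[0-9a-f]{4}:[0-9a-f]{2}:[0-9a-f]{2}\.[0-7]")


def usb_bus_controllers(root="/sys/bus/usb/devices"):
    """Map each USB root hub (usb1, usb2, ...) to its controller's PCI address."""
    mapping = {}
    for entry in Path(root).glob("usb[0-9]*"):
        # The root hub is a symlink; its real parent directory is the controller.
        parent = entry.resolve().parent.name
        if PCI_ADDR.fullmatch(parent):
            mapping[entry.name] = parent
    return dict(sorted(mapping.items()))


def buses_by_controller(root="/sys/bus/usb/devices"):
    """Group the root hubs by controller, i.e. what moves together on pass-through."""
    grouped = {}
    for bus, controller in usb_bus_controllers(root).items():
        grouped.setdefault(controller, []).append(bus)
    return grouped


if __name__ == "__main__":
    for controller, buses in buses_by_controller().items():
        print(controller, "->", ", ".join(buses))
```

Plug a device into a rear port, check which bus it lands on with `lsusb`, and you know which controller that port belongs to.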

Performance

Benchmarks

Performance seems better than the first time I did this, but I’ll have more conclusive proof once I’m done benchmarking the world of things. This time around, the highest-impact performance tweaks are applied. I ran Cinebench R23, and the single-core test ran better than expected. Multi-core was lower than before I did the CPU pinning tweaks, so I think there’s some fiddling to do there that is specific to this configuration. Time Spy was flying, but I do need to check back against my old stats to see the margins.
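For anyone fiddling with CPU pinning themselves, here is a rough sketch of how a pinning layout could be generated. It is not the exact configuration used here. It reads the SMT sibling pairs from sysfs (`thread_siblings_list`), leaves the first core(s) to the host, and prints libvirt `<vcpupin>` elements so that guest vCPU pairs land on sibling host threads. The function names and the `host_cores` parameter are assumptions for illustration.

```python
from pathlib import Path


def parse_cpu_list(text):
    """Parse a sysfs CPU list such as '0,8' or '0-3,8-11' into sorted ints."""
    cpus = set()
    for part in text.strip().split(","):
        if not part:
            continue
        if "-" in part:
            low, high = part.split("-")
            cpus.update(range(int(low), int(high) + 1))
        else:
            cpus.add(int(part))
    return sorted(cpus)


def sibling_groups(root="/sys/devices/system/cpu"):
    """Return one tuple of host thread IDs per physical core."""
    groups = set()
    for path in Path(root).glob("cpu[0-9]*/topology/thread_siblings_list"):
        groups.add(tuple(parse_cpu_list(path.read_text())))
    return sorted(groups)


def vcpupin_lines(groups, host_cores=1):
    """Build <vcpupin> elements, keeping the first host_cores cores for the host."""
    lines = []
    vcpu = 0
    for core in groups[host_cores:]:
        for host_thread in core:
            lines.append(f"<vcpupin vcpu='{vcpu}' cpuset='{host_thread}'/>")
            vcpu += 1
    return lines


if __name__ == "__main__":
    # Paste the output inside <cputune> in the domain XML (virsh edit).
    print("\n".join(vcpupin_lines(sibling_groups())))
```

Whether this layout helps or hurts multi-core scores depends on the CPU’s cache and core layout, so treat the output as a starting point to benchmark, not as the answer.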

Actual game

Benchmarks are only useful if you have existing ones to compare to, so I tested Dirt Rally 2.0. Because it has possibly the worst VR support available and runs like a pig at the best of times, that’s my touchy-feely benchmark tool of choice. I loaded up the game on a stage I literally use for graphics/VR settings tweaking, and couldn’t tell any difference from native. No frame drops, no stutters. I did 3 runs and managed to get within 3 tenths of my stage record. So I’d say it’s working well, but don’t @ me.

What should I learn from this?

So, looking at this project, which has kept me sane (some say insane): IT DOESN’T HAVE TO BE THIS COMPLICATED. If you take one thing away from this, I hope it is: oh, so it’s possible to play games in a Windows virtual machine that’s running on top of Linux. Needless to say, I went balls to the wall on this. I built a computer with enough stuff to handle work and play at the same time, all the while keeping that dirty little privacy-disrespecting Windows 10 operating system in a padded room, alone to contemplate what it’s done.

BUT KELVIN, I want to try this, but keep it simple. What’s the minimum I need?

A CPU and mainboard that support virtualization. This particular configuration uses 2 graphics devices, which can be 2 discrete GPUs, or one onboard GPU and one discrete one. (You can actually make this work with only one GPU, but I haven’t gone there yet, so I don’t know the specifics of that method.) You need a screen with 2 video ports. You will also need a mainboard that has good IOMMU support. That is, every port and controller on the mainboard should sit in its own IOMMU group. Or at the very least, the ones you choose to pass through to the guest VM need to be in groups of their own.
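If you already have Linux running on the board, you can check the grouping yourself. With the IOMMU enabled in the BIOS and kernel, the groups are exposed under `/sys/kernel/iommu_groups`. The sketch below lists them and flags any device that shares a group with something in a different PCI slot. The function names are made up for illustration. A GPU sharing a group with its own audio function (same slot, different function number) is normal, so that case is not flagged.

```python
from pathlib import Path


def list_iommu_groups(root="/sys/kernel/iommu_groups"):
    """Return {group_number: [pci_address, ...]} as exposed by sysfs."""
    groups = {}
    base = Path(root)
    if not base.is_dir():
        return groups  # IOMMU disabled, or not exposed on this system
    for group_dir in base.iterdir():
        devices_dir = group_dir / "devices"
        if not group_dir.name.isdigit() or not devices_dir.is_dir():
            continue
        groups[int(group_dir.name)] = sorted(p.name for p in devices_dir.iterdir())
    return dict(sorted(groups.items()))


def foreign_group_mates(groups, pci_address):
    """Devices in the same group that are NOT functions of the same slot."""
    slot = pci_address.rsplit(".", 1)[0]  # strip the function number
    for members in groups.values():
        if pci_address in members:
            return [m for m in members if m.rsplit(".", 1)[0] != slot]
    raise KeyError(pci_address)


if __name__ == "__main__":
    found = list_iommu_groups()
    for number, members in found.items():
        print(f"group {number}: {', '.join(members)}")
        for address in members:
            if foreign_group_mates(found, address):
                print(f"  {address} shares its group with another slot")
```

An empty result usually means the IOMMU is switched off in the BIOS or on the kernel command line. Match the addresses to actual hardware with `lspci`.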

Most important: google your mainboard model name + IOMMU. I guarantee someone, somewhere, has already done the test to see if it’ll work.

That all said, you can make do without this, because there is an ACS override patch for the kernel, which splits the groups in software. It comes at a cost, though: the separation is no longer guaranteed by the hardware, and it can take a performance hit.

As far as brands that usually support this: Gigabyte and ASRock are fantastic. ASUS has been seen to work too. Your mileage may vary. And do note that the only reason I went and bought a new mainboard is because I wanted to compromise less on features and performance. I could have totally made the previous mainboard work. But I’m a madman.

Making it work with one screen is possible. Ideally it has 2 video inputs: plug both GPUs into the monitor’s 2 inputs and you can manually switch between them. If you only have one video input on your monitor, you can actually just keep swapping cables. It’s not ideal, but it will work.

The same can be said of a single keyboard, mouse and audio output device. Ideally, 2 separate sets are better, because of the wear and tear of plugging cables in and out if you only have 1 set. But it’s possible, and it’s also nothing a KVM switch can’t solve: you plug your one keyboard and mouse set into the KVM switch, and use the switcher to toggle between host USB and guest USB. (I should do that, but the wife is using it upstairs. Don’t poke the bear.)

I mostly followed Pavol Elsig’s guides, though I didn’t just trust him at first. I went through documentation, written guides and video guides. After watching his videos, I found his method by far the easiest way to get up and running. Google him: he has a YouTube channel and keeps all his helper scripts on GitHub. He covers every major distro: Ubuntu, Fedora, Manjaro (which works perfectly well with Arch).

Little gotchas I met along the way:

- Gaming mice, or other special devices that poll USB at 1000Hz or some other high frequency, should always be connected to a port that is passed through.

- Passing through hardware is best for native performance, but it doesn’t mean you always need it. You can also redirect hardware when you don’t need sub-millisecond response time. This is the main difference between the “gaming VM” and the “work VM”.

- Some PCIe slots will disable certain features on your mainboard when they are used. Same goes for some M.2 slots. Not every mainboard can use every single physical slot without auto-disabling, or cutting the speed of, some other feature attached to the same lanes. This is where you learn to read the circuit diagram in the manual. And even then, they don’t always explain it well.

- Pass a whole USB controller through, not a device attached to a physical port. That will allow you to plug and unplug stuff while the VM is running. You DON’T have to do it this way: you could just pass through an individual connected USB device. But if you do, you can’t unplug it, or else it disappears from the guest and you’ll need a reboot.

- Monitor built-in USB KVM switches are a great way to expand a USB 3.0 port to support a ton of devices. Use them. Heck, even if you’re not doing this, it’s a great way to connect things. A display will sleep from inactivity, which can power down connected devices, extending the life of yo’ shit yo.

- UEFI snapshots are possible, it’s just not ideal.

- TPM can be faked, for those that want Windows 11.

- If you have RGB on your mainboard, GPU or chassis, be prepared to fiddle with some open-source tools for controlling that. They mostly work, but sometimes they don’t.

- CPU and chassis fan management software may not work in Linux. If at all possible, set these fan curves and thresholds in the BIOS.

- Temperature monitoring is done on the OS that manages the hardware, duh. That means your gaming GPU temp, you check in Windows. But your CPU and motherboard temps, you would check in Linux.
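On that last point, the host-side temperatures can be read straight from the kernel’s hwmon interface without any extra tools. This is a minimal sketch, assuming the usual sysfs layout where each chip under `/sys/class/hwmon` has a `name` file and `tempN_input` files holding millidegrees Celsius. The function name is made up for illustration. A GPU that is bound to the VM should not show up here, which fits with checking it from inside Windows instead.

```python
from pathlib import Path


def read_hwmon_temps(root="/sys/class/hwmon"):
    """Return a list of (chip, sensor_label, degrees_celsius) tuples."""
    readings = []
    for chip in sorted(Path(root).glob("hwmon*")):
        name_file = chip / "name"
        chip_name = name_file.read_text().strip() if name_file.is_file() else chip.name
        for temp_file in sorted(chip.glob("temp*_input")):
            stem = temp_file.name[: -len("_input")]  # e.g. "temp1"
            label_file = chip / f"{stem}_label"
            label = label_file.read_text().strip() if label_file.is_file() else stem
            try:
                millidegrees = int(temp_file.read_text().strip())
            except (OSError, ValueError):
                continue  # some sensors refuse reads or report garbage
            readings.append((chip_name, label, millidegrees / 1000.0))
    return readings


if __name__ == "__main__":
    for chip_name, label, celsius in read_hwmon_temps():
        print(f"{chip_name:12} {label:12} {celsius:5.1f} C")
```

Tools like `sensors` from lm_sensors read the same interface, so this is mostly useful if you want to script your own alerts or logging.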

If you actually made it this far, here’s a noddy badge. Tell your mommy, the teacher says you’re fantastic.
