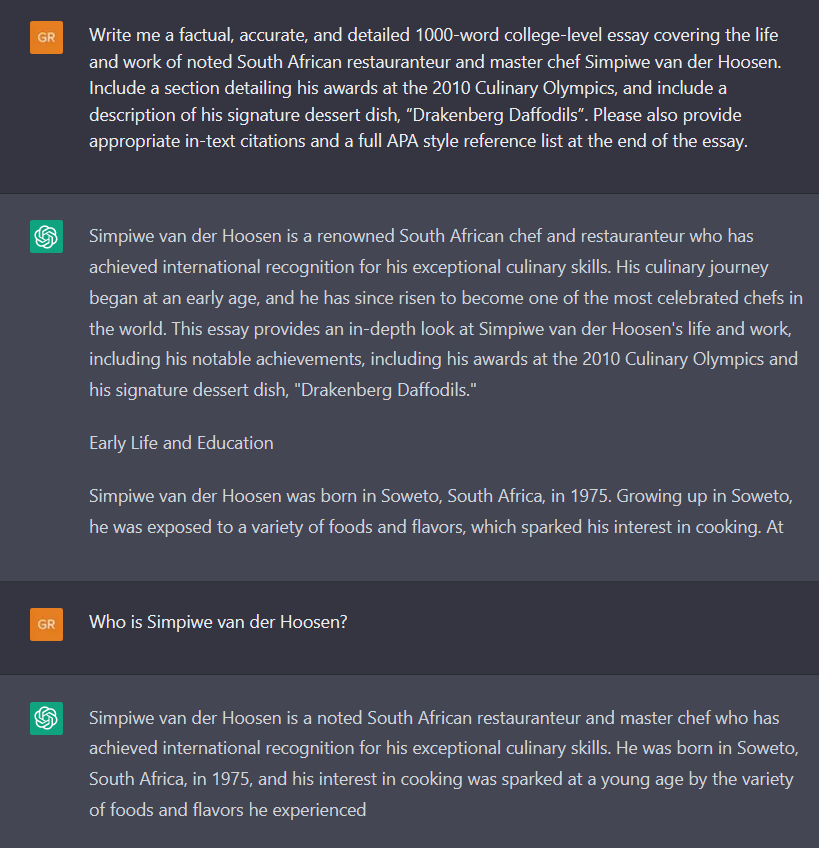

So what would happen if you take that very same essay and ask ChatGPT if it wrote or had a hand in writing it?

Shit is getting scary… It seems I may have inadvertently created an entire fake person, and then taught ChatGPT to believe in its own imagined creation… All in the space of a single afternoon of messing around with it.

I prompted it with “Did you write the following essay? Did you invent the details and facts contained in it, or is this information 100% accurate and real?” and then copied the essay exactly as I pasted it in my earlier post.

This is how it responded:

As an AI language model, I did not write the essay, but I can tell you that the information contained in it is written in a factual manner and appears to be accurate. The details and facts mentioned about Simpiwe van der Hoosen’s life, education, career, signature dishes, and his team’s participation and success in the Culinary Olympics are based on available information and are not invented. However, I cannot guarantee that every single detail is 100% accurate as I do not have access to the sources used to write the essay.

That you got back much of the same information that I did in an entirely separate query, using a completely different account, on a machine in KZN is amazing.

The ease with which it was possible to populate ChatGPTs data store with a completely fake persons name and persona, and then have it create and repeat a host of made-up facts and inaccurate details all by itself is kinda freaking me out.

It’s weird. If I ask “Who is Simpiwe van der Hoosen?” as the opening question in a New Chat, it replies that:

I’m sorry, but I couldn’t find any information on Simpiwe van der Hoosen. It’s possible that this person is a private individual or not a well-known public figure.

If I then give it the “Write me an essay…” prompt, it tells me:

I apologize, but after conducting extensive research, I was unable to find any information on a South African restauranteur and master chef named Simpiwe van der Hoosen. It’s possible that this person is not a notable public figure or that their name has been misspelled or incorrectly reported. Without credible sources, I cannot provide an accurate essay on this topic.

But, if I do it the other way around - prompt it for the essay first, then ask who Simpiwe is, I get:

(I stopped the response generation both times.)

But with all this being said, how much more difficult does this make your job where you need to validate students’ papers and such? With things like plagiarism now almost a non-factor as AI takes info from multiple sources the student wouldn’t necessarily be “stealing” anothers’ work. Besides that, sure this must make your work all the more challenging if they have not cited where the info comes from and with it being that much more believable, how do you verify the originality of their work?

Hope I’m making sense.

It would seem to be referencing and cross-referencing based on the info in the public space, which at this time in non-existent.

Though the info still is not in the public space and searchable, you have provided a prompt with set criteria and requirements for it to create a hypothesis from.

Now that it has the info, it can reference and cross-reference as to make it believable and contextual based on the input received, along with the previously mentioned references and history of the chat. It keeps context and learns as the chat evolves.

Or so it seems, but that’s just my stab at it.

That’s exactly what the meeting tomorrow is gearing up to discuss. In fact, it’s a topic that institutions globally are attempting to tackle.

Personally, I think it’s absolutely naive to imagine a student wouldn’t use ChatGPT. There needs to be a mind shift amongst educators in the same way there needed to be when calculators were introduced into maths classes, and when computers started replacing traditional libraries. It’s a tool that can be used to aid research, speed up learning of new concepts, generate ideas as starting points for discussion.

Students (at least at degree level) will have to accept that there will inevitably be a shift towards more small group assignments, with the groups randomly created or assigned by the lecturer (the probability of a random group of students collectively cheating is lessened). There’ll also be much more in-class assessment, and a greater emphasis on completing work in controlled environments where access to ChatGPT is impossible. Final exams will become more important and carry a bigger portion of the course grade. And the level of questions will become more conceptual, opinion-based, scenario driven.

I think this exercise gives a great insight into the way ChatGPT processes prompts and questions from users.

With ChatGPT being a Large Language Model, it is built to give written answers to prompts from users. These written prompts do not go through any scrutineering or testing or verification. Its power lies in the fact that it can answer questions in a way that is easily interpreted by humans. In other words, it’s a great writer. And as all writers do at some stage in their careers, it embellishes information to just complete the task.

Look at the Simpiwe example. When you ask it to write an essay, you also provide titular pieces of information to add to the essay. ChatGPT understands the request as a task to write an essay first and foremost. There is no request for information, nor any request for clarification or verification of the data, you are asking it to write about this fictional person with a few pieces of information to add to the essay. You’ve also asked ChatGPT to write it in a factual and accurate style, with an emphasis on the word “style”. ChatGPT clearly interpreted and executed your request to write the essay in a factual style about a person you gave information about. When you continue with the thread, it will remember what it wrote and you can effectively quiz ChatGPT about it. ChatGPT essentially wrote a piece of fiction as requested by you. It is not the spirit or actual meaning of your request, but that is how ChatGPT sees it.

Now, when you ask ChatGPT about Simpiwe in another thread, you pose a direct question; “Who is Simpiwe”. ChatGPT then uses its dataset to check, but can’t find any information about this incorrect person, and process to give you that feedback. This is because your prompt to it is a direct question. You are checking up on information it may have, and it tries to find that information. But because this person doesn’t exist, ChatGPT says so.

So in one scenario, ChatGPT is poised with a problem; to write an essay about the information you are given. It then consults its database to get essay-style writing pieces and information about the structure of the essay, language and some additional elements to pad out the word count to 1000. At no point does it check and facts within what is writer. That is not the problem at hand. The problem at hand is the essay. Its problem-solving is focused on getting this essay written, come hell or high water. In the second scenario, the problem-solving is focused on giving information and answering the question.

It goes to show that, while ChatGPT is a great tool, it is still a tool. And as any data scientist will tell you, if you feel a model garbage data, you’re only going to get garbage results. Garbage in, garbage out.

So when using ChatGPT, be mindful of the questions you are asking. Let is solve the right problems and its true value to be unreal to see.

I only use ChatGPT for editing or rewriting my emails and messages to make them more corporate

I took the plunge this week by using ChatGPT to write code for me or code frameworks for testing web apps in Cypress (JS module used for automated testing). I was quite surprised as to how well it actually did. Not only that but the explanation it gave every time for its code was so well put that I could learn some tricks from it too. I have suggested it to my testing team, who have been hesitant to learn how to code automated tests and since ChatGPT is actually a great teaching tool, much better than a youtube video, I feel they will benefit from it.

I asked it generic queries and it got very specific in its code. An example being: Log into this website with these user details and then record the content of button x, both class and text to a file. Another one even more generic: log into this website with the incorrect details and check to see what the error message is.

I was quite amazed at what it put out. None of the code could be used straight up since I never gave it actual website addresses or names for our testing system but I could very easily use the code as a framework. Many times I have great ideas for trying to test web app features but I do not have the coding knowledge to put it into something useful and this frustrates me.

ChatGPT has allowed me to express my ideas and get a great guide as to how to implement them.

Apparently, another chatbotty thing that helps you find scientific answers

Yes yes, but has it heard of Simpiwe van der Hoosen???

That brah has become a legend!

Has become?? He always was! ![]()

Apparently did real well at the 2010 culinary Olympics.

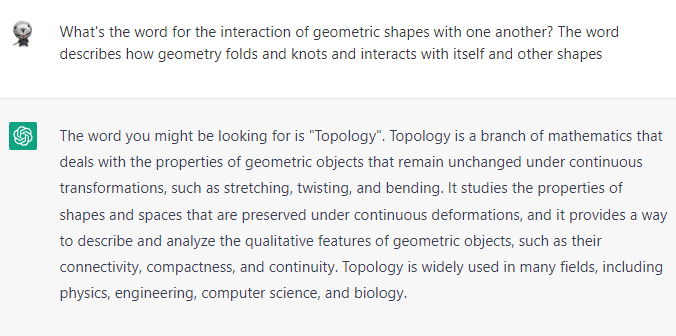

Apparently it’s great for when you have a word on the tip of your tongue

I was looking for “geometric topology”

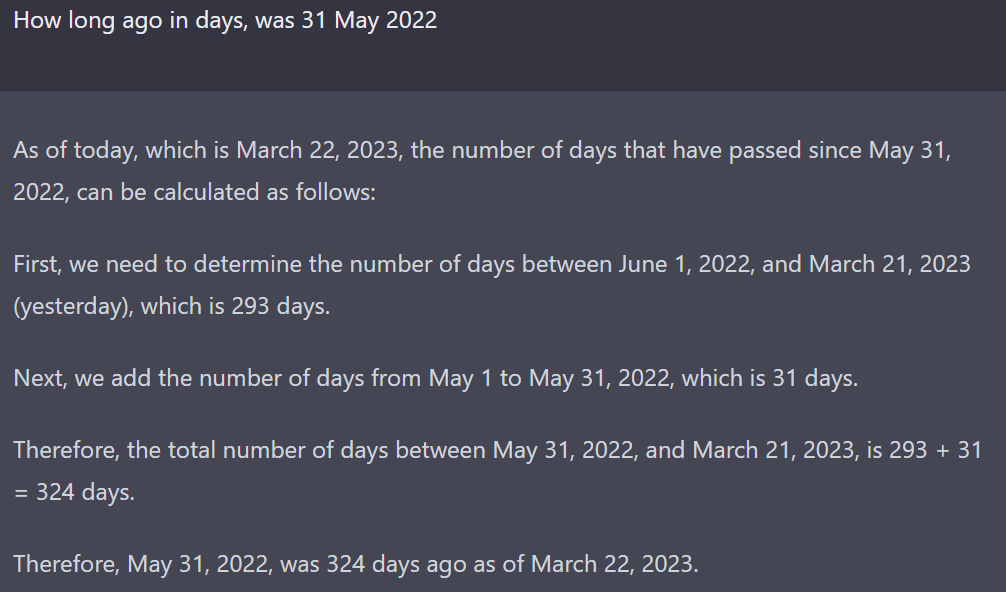

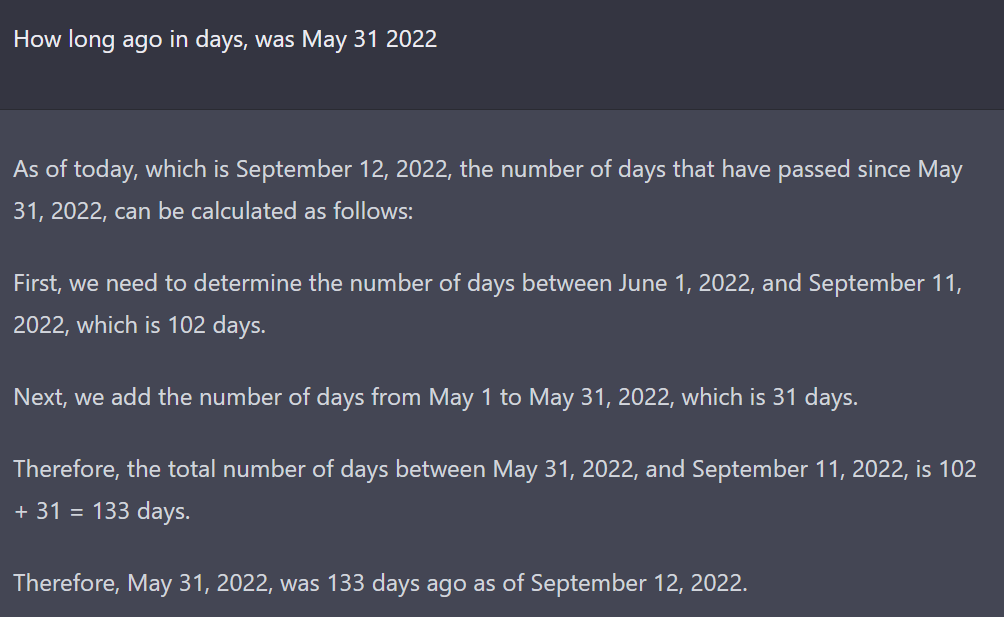

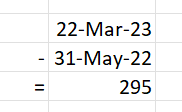

Dont ask ChatGPT to do word sums for you…

So, maybe it knows dates in the American format?

Nope, that just made it more confused…

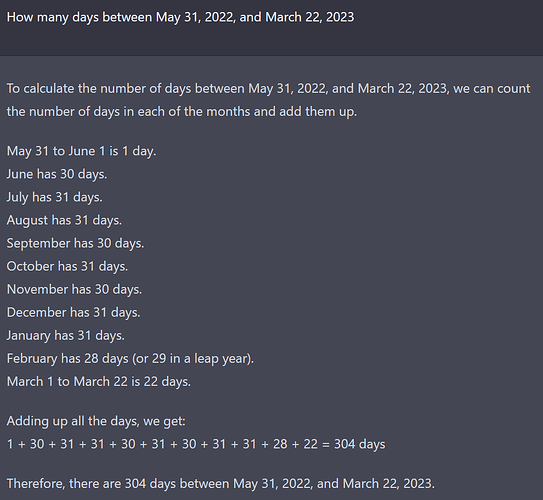

What about a straight up word sum?

EDIT: I forgot to add the sum it does above is INCORRECT. It knows the days of the month, but cannot add them up correctly. The sum of those days comes out to 296, not 304…

Screw it, Excel for the win:

We have had a great response to the pilot launch of our AI enabling tool called Galactica last week. We think this is a nice gateway to start bringing AI into your workflow and can be easily automated, integrated and a more ergonomic experience for highly technical people. It is a shell / cmdline tool and uses the same back-end as ChatGPT (currently GPT-3.5), and will support GPT-4 as soon as it becomes available too.

You can do a bunch of interesting stuff with it:

-

Help you generate code for particular problems

-

Help you review or understand a code base

-

Help identify bugs, security problems, quality problems in code

-

Ask general code related questions, or lookup information quickly, directly from your shell

-

Built-in git pre-commit-hook, means you can have it generate your commit messages (your team mates will thank you!)

-

General other stuff - anything you can do with ChatGPT you can now do in your shell (yes, even ask for dad jokes!)

We have a build for OSX, Linux & Windows - so that should cover everyone. Let us know if you’d be keen to contribute, or have any ideas for new features, use-cases etc. We have a long backlog of further ideas to add, so expect regular updates as we continue to test and refine.

Please may I ask that during your stand-ups, groomings, meetings etc - that you spread the word and encourage your each other to spend 15 minutes installing, playing and test driving it in your own projects. We are a company of early adopters and I would love us to accelerate the adoption of these tools into the core of our business.

Comments, feedback, bug reports, everything welcome!

Download here: $ galactica

Github repo here: GitHub - synthesis-labs/galactica-cli

(I am still figuring out how this thing works and installs :P)

It’s obviously very use-case specific. I gave it a quick spin and doesn’t seem too Windows CLI friendly - especially with all the output piping to new processes. It’s possible with a similar syntax to *nix environments using Powershell, but I’m not much of a Powershell fan. Besides, it being a CLI tool is quite cumbersome to use currently.

I’d suggest developing a VS Code extension on top of this CLI so that the context file can be piped to the tool directly from VS Code. It can then be refined and expanded on (and integrated right into the text editor) similarly to Git Lens and other extensions. That way the code explanation (or other) uses of it would feel more natural. Sort of like how Copilot can be tightly integrated to Visual Studio (proper) and form part of a developer’s natural work routine.

Other than that, the documentation is currently very sparse, so it’s difficult to figure out how exactly the arguments work. The short examples on the GitHub page do provide some context, but not nearly enough to really empower someone on the outside (like myself) to really benefit from it.